Training Pipeline

So far, we have used uv and Supabase. Now, we’ll move on to selecting a model, defining hyperparameters, tuning it, and performing predictions while tracking everything using MLflow.

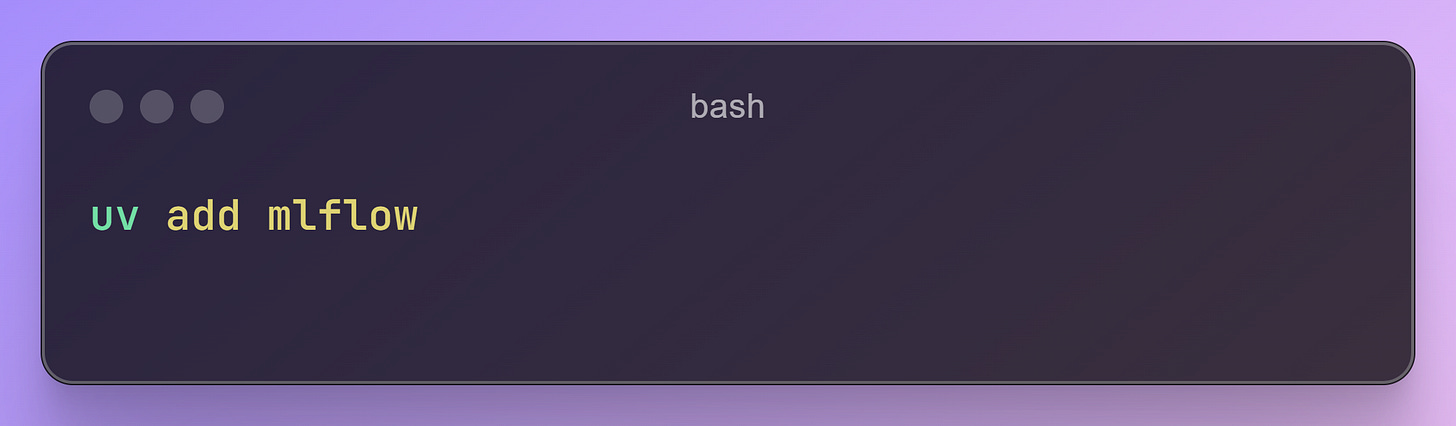

Install Essential Libraries

To begin, install MLflow:

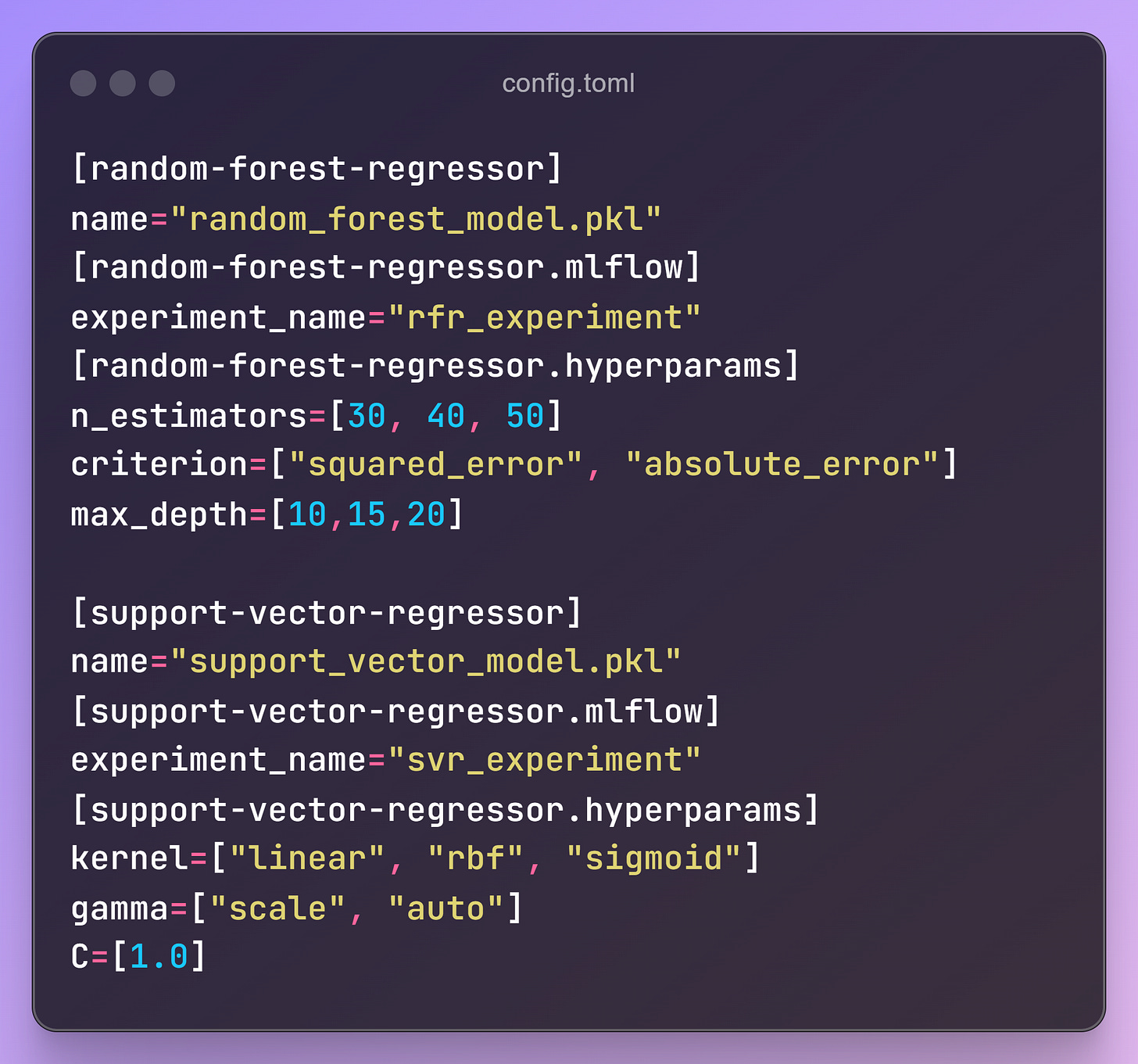

Define Model and Hyperparameters

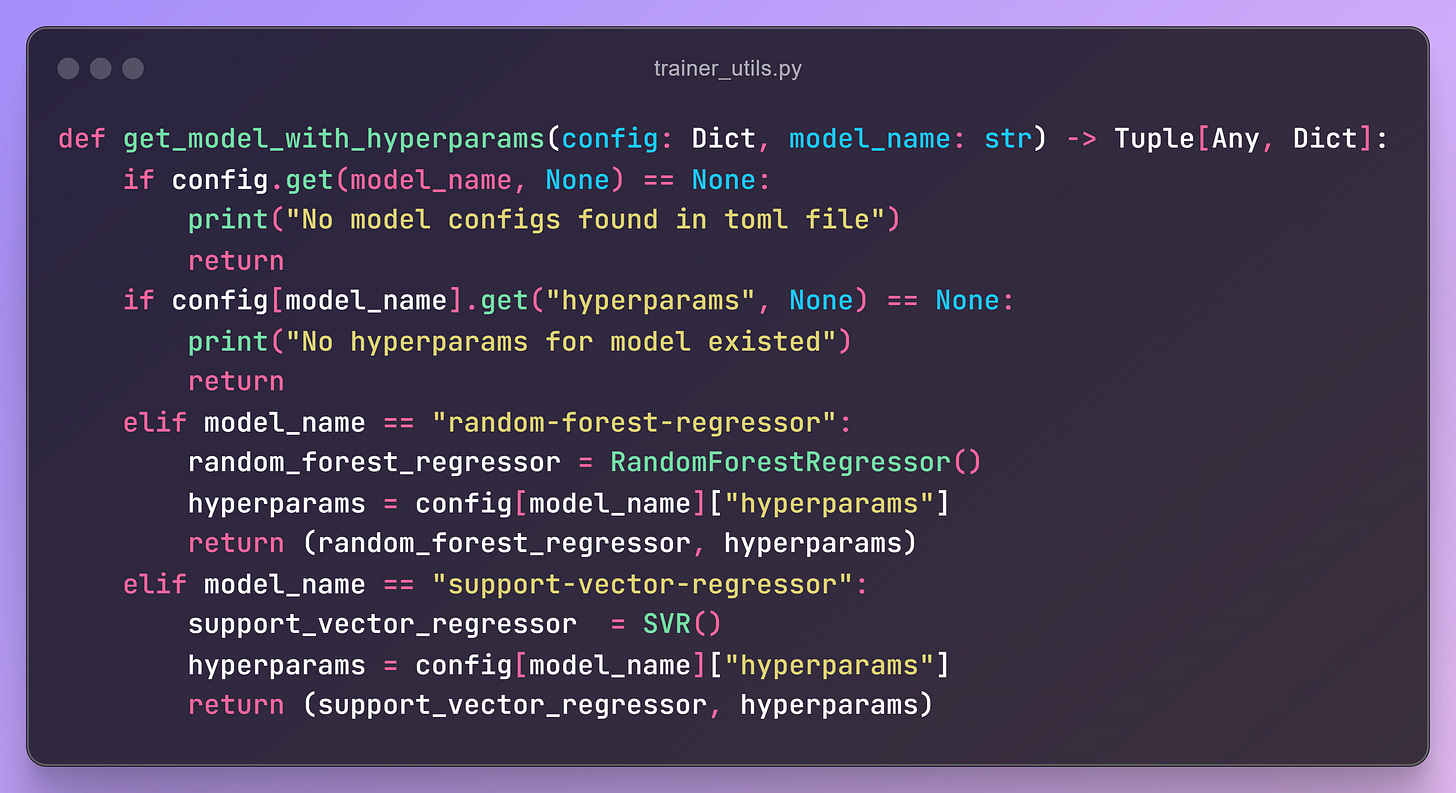

Before starting the tuning and training process, select your model and its hyperparameters. Store these configurations in one central file, so that models and search ranges can be added or changed without touching the training code.

For example, include a dictionary where each key corresponds to a model name, and its value contains the hyperparameter ranges. You can define as many models and parameters as needed.

This configuration enables dynamic selection of the model by specifying its key. A script will load the selected model along with its parameters and return them for further use in tuning, training, and tracking with MLflow.
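As a rough illustration of that idea, here is a minimal sketch of such a configuration and loader. The names MODEL_CONFIG and load_model, the two scikit-learn models, and the grid values are all assumptions chosen for the example, not the exact contents of the project's file.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression

# Hypothetical central registry: model key -> estimator class, fixed
# constructor arguments, and the hyperparameter grid to search over.
MODEL_CONFIG = {
    "logistic_regression": {
        "estimator": LogisticRegression,
        "init_params": {"max_iter": 1000},
        "param_grid": {"C": [0.1, 1.0, 10.0]},
    },
    "random_forest": {
        "estimator": RandomForestClassifier,
        "init_params": {"random_state": 42},
        "param_grid": {"n_estimators": [100, 200], "max_depth": [None, 5, 10]},
    },
}


def load_model(model_key):
    """Return an unfitted estimator and its hyperparameter grid for model_key."""
    if model_key not in MODEL_CONFIG:
        raise KeyError(f"Unknown model '{model_key}'. Available: {sorted(MODEL_CONFIG)}")
    entry = MODEL_CONFIG[model_key]
    model = entry["estimator"](**entry["init_params"])
    return model, entry["param_grid"]
```

Calling load_model("random_forest") would then hand back a fresh RandomForestClassifier together with its grid, ready to pass to the tuner.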

Note: Inside the training class, we call our feature_engine, which already returns preprocessed data, so its output can be used directly for training.

Tuning and Experiment Tracking

Steps for tune_and_predict Function

1. Split Data: Separate the training and testing data into features and target variables.

2. Set Up MLflow Experiment: Create or set an MLflow experiment, naming it based on the model being trained.

3. Initialize Tuner: Use GridSearchCV to initialize a tuner estimator, passing the model and hyperparameters returned by the configuration script.

4. Train the Model: Fit the model using the preprocessed data from the feature_engine pipeline and make predictions.

5. Log Metrics and Models: Use mlflow.log_metrics to log evaluation metrics. Save the trained model with the log_model function of the matching MLflow flavor (for example, mlflow.sklearn.log_model), along with a signature from infer_signature for reproducibility.

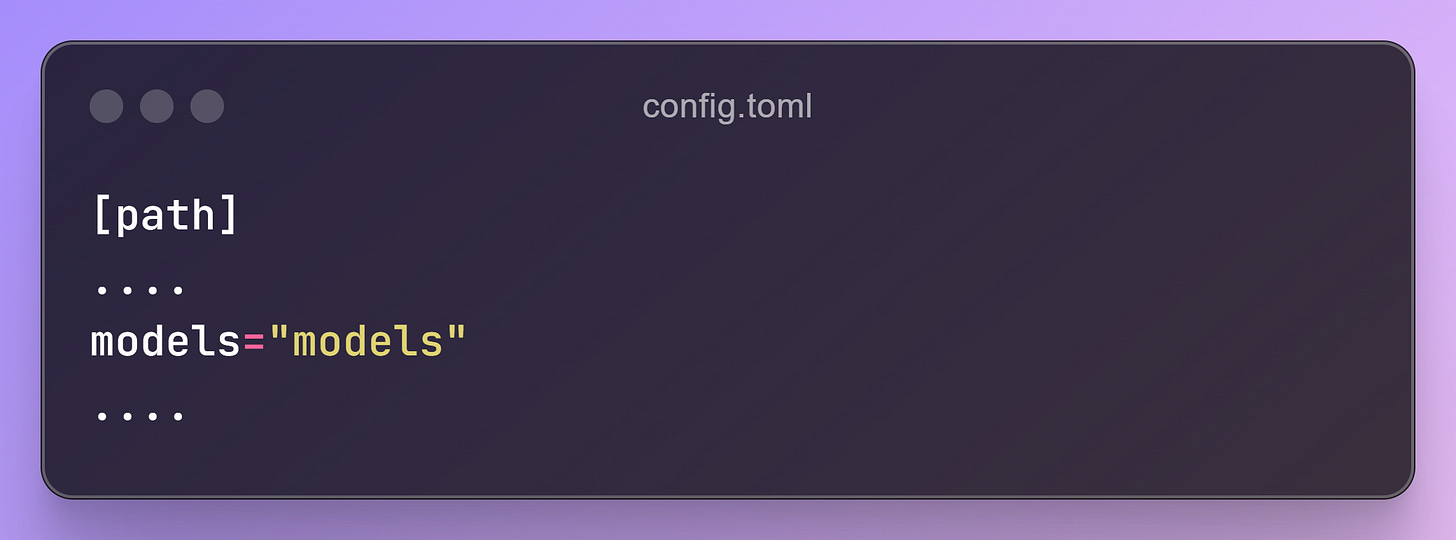

Add Model Paths to Configuration:

Update the configuration file to include paths for saving trained models.
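One possible shape for those entries is sketched below. The models directory, the .pkl file names, and the helper get_model_path are hypothetical and only show how a path can be looked up by the same model key.

```python
from pathlib import Path

# Hypothetical output directory and file names for the trained models.
MODEL_DIR = Path("models")
MODEL_PATHS = {
    "logistic_regression": MODEL_DIR / "logistic_regression.pkl",
    "random_forest": MODEL_DIR / "random_forest.pkl",
}


def get_model_path(model_key):
    """Return the configured save path for the given model key."""
    if model_key not in MODEL_PATHS:
        raise KeyError(f"No model path configured for '{model_key}'")
    return MODEL_PATHS[model_key]
```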

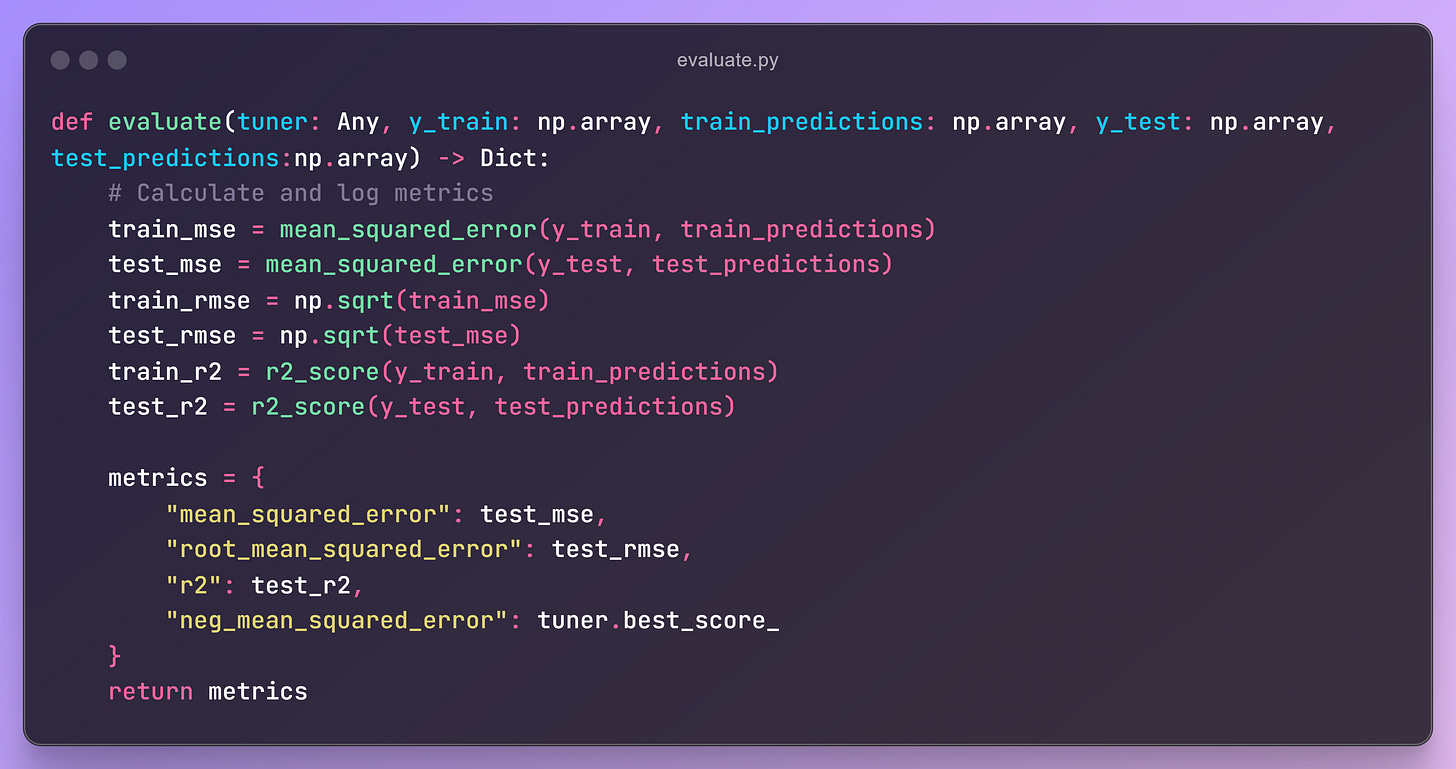

Evaluation Metrics

For evaluation, the evaluate function calculates metrics by comparing actual outputs with test predictions.

The evaluation script will be written in evaluate.py and will include common metrics chosen by problem type: accuracy, precision, recall, and F1-score for classification, or RMSE for regression.
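A minimal sketch of what such an evaluate function might look like is shown below. The problem_type argument, the macro averaging, and the returned dictionary keys are assumptions made for this example.

```python
import numpy as np
from sklearn.metrics import (
    accuracy_score,
    f1_score,
    mean_squared_error,
    precision_score,
    recall_score,
)


def evaluate(y_true, y_pred, problem_type="classification"):
    """Compare actual outputs with predictions and return a dict of metrics."""
    if problem_type == "classification":
        # Macro averaging treats every class equally; zero_division=0 avoids
        # warnings when a class is never predicted.
        return {
            "accuracy": float(accuracy_score(y_true, y_pred)),
            "precision": float(precision_score(y_true, y_pred, average="macro", zero_division=0)),
            "recall": float(recall_score(y_true, y_pred, average="macro", zero_division=0)),
            "f1": float(f1_score(y_true, y_pred, average="macro", zero_division=0)),
        }
    if problem_type == "regression":
        # RMSE is the square root of the mean squared error.
        return {"rmse": float(np.sqrt(mean_squared_error(y_true, y_pred)))}
    raise ValueError(f"Unsupported problem_type: {problem_type}")
```

Because the result is a flat dictionary of floats, it can be passed straight to mlflow.log_metrics.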